-

New Feature

-

Resolution: Done

-

Major

-

None

-

All

-

Small

In Studio, we have a fully described schema:

Currently, a Studio schema can be bound to a TCK connector's property with the @Structure annotation. However, only the column names are passed to the connector at runtime. This means that even if the user takes care to fill in the connector's schema definition in Studio, all the other information is ignored.

Example:

This List<String> option will be set with all the column names from the Studio schema.

This is for compatibility with Pipeline Designer, which doesn't provide such a fully described schema.

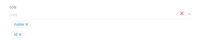

In Pipeline Designer, the same option is currently rendered as a multi-select list of labels, in which you can also create new entries:

These are only labels, which is why we only retrieve the column names.

The TCK / Studio integration configures the connector with the defined schema entries:

configuration_tSwisscomInput_1.put("configuration.dataset.cols[0]", "name");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[1]", "solde");

configuration.dataset.cols is a list of String, so this works.
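As an illustration of why a list of String is enough here, the indexed keys can be read back into a List<String> with a simple loop. This is only a minimal sketch: the class FlatListBinding and the method readList are hypothetical names, not part of the TCK API, and the real integration may resolve the keys differently.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper, for illustration only (not the TCK implementation).
public class FlatListBinding {

    // Collects prefix[0], prefix[1], ... and stops at the first missing index.
    static List<String> readList(Map<String, String> configuration, String prefix) {
        List<String> values = new ArrayList<>();
        for (int i = 0; configuration.containsKey(prefix + "[" + i + "]"); i++) {
            values.add(configuration.get(prefix + "[" + i + "]"));
        }
        return values;
    }

    public static void main(String[] args) {
        Map<String, String> configuration = new LinkedHashMap<>();
        configuration.put("configuration.dataset.cols[0]", "name");
        configuration.put("configuration.dataset.cols[1]", "solde");
        // prints [name, solde]
        System.out.println(readList(configuration, "configuration.dataset.cols"));
    }
}
```

Each entry carries a single value, so there is no place to attach a DB name, a DB type or a date pattern to a column.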

If we want to enhance this mechanism, it should be possible to let the developer define a list of POJOs instead of a list of String. The integration would then set a schema attribute on the POJO whenever a POJO field name matches a schema attribute name.

For example, in a connector we could have:

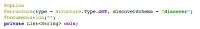

@Option

@Structure(type = Structure.Type.OUT, discoverSchema = "discover")

@Documentation("")

private List<MyRec> rec;

@Data

public class MyRec {

public String name;

public String dbname;

public String dbtype;

public String datepattern;

}

In the Studio context, we know that the rec option will be bound to the Studio schema. The rec option is a list of MyRec, which contains several attributes. Those attributes match the Studio schema ones. So, in the generated code we could have:

configuration_tSwisscomInput_1.put("configuration.dataset.cols[0].name", "name");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[0].dbname", "#1_name");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[0].dbtype", "VARCHAR");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[0].datepattern", "");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[1].name", "solde");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[1].dbname", "#2_solde");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[1].dbtype", "FLOAT");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[1].datepattern", "");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[2].name", "birthdate");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[2].dbname", "#3_birthdate");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[2].dbtype", "DATE");

configuration_tSwisscomInput_1.put("configuration.dataset.cols[2].datepattern", "mm/dd/YYYY");
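The name-matching rule described above could be sketched as follows: for every schema column, only the attributes whose name equals a field name of the POJO are emitted as flattened keys. This is a rough sketch under assumptions, not the actual Studio code generator: the class SchemaToPojoKeys, the method flatten, and the representation of a schema column as a Map of attribute name to value are all hypothetical.

```java
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of the proposed matching, not the Studio generator.
public class SchemaToPojoKeys {

    // Mirrors the MyRec example from this ticket.
    public static class MyRec {
        public String name;
        public String dbname;
        public String dbtype;
        public String datepattern;
    }

    // Assumption: each schema column is modelled as attribute name -> value.
    static Map<String, String> flatten(String prefix, Class<?> pojoType,
                                       List<Map<String, String>> columns) {
        Map<String, String> configuration = new LinkedHashMap<>();
        for (int i = 0; i < columns.size(); i++) {
            Map<String, String> column = columns.get(i);
            for (Field field : pojoType.getDeclaredFields()) {
                // Skip compiler-generated and static fields.
                if (field.isSynthetic() || Modifier.isStatic(field.getModifiers())) {
                    continue;
                }
                // Keep a schema attribute only if a POJO field has the same name.
                String value = column.get(field.getName());
                if (value != null) {
                    configuration.put(prefix + "[" + i + "]." + field.getName(), value);
                }
            }
        }
        return configuration;
    }

    public static void main(String[] args) {
        List<Map<String, String>> schema = List.of(
                Map.of("name", "name", "dbname", "#1_name", "dbtype", "VARCHAR",
                        "datepattern", "", "comment", "not declared in MyRec"));
        flatten("configuration.dataset.cols", MyRec.class, schema)
                .forEach((key, value) -> System.out.println(key + " = " + value));
    }
}
```

In this sketch the schema attribute "comment" is silently dropped because MyRec declares no field with that name, so a connector only receives the attributes it explicitly asks for.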

In the Pipeline Designer context, we will no longer have a simple list of String, but a list of objects, as we already can for some other configurations.

- is duplicated by

-

TCOMP-1760

Support of studio schema DB name

-

- Rejected

-

-

TCOMP-1754

Ability to set a date pattern during the Schema creation

-

- Rejected

-

-

TCOMP-1755

Field names in Siebel to allow blank and other special characters

-

- Rejected

-